Data automation tools allow users to extract and enter data automatically. They can save you time and promote greater accuracy in your workflow by reducing or eliminating human error. There are many different types of data automation software, each suited to businesses with different needs. As such, it’s essential to explore your options when choosing between tools or platforms.

Data automation companies offer this software. It is often scalable, meaning that it can grow alongside your business, so users can access what they need when they need it. For example, if you’re a small business just starting out, you might have limited data entry needs. As your business grows, however, you may require additional help. This is where data automation software can come in handy.

Data automation benefits vary depending on the specific tool you’re using, but generally speaking, here’s what you can expect when automating the data entry process:

- Increased efficiency
- Improved accuracy
- More flexible scalability
- Reduced costs
- Better security
- Help with decision-making
- Help with various data automation projects

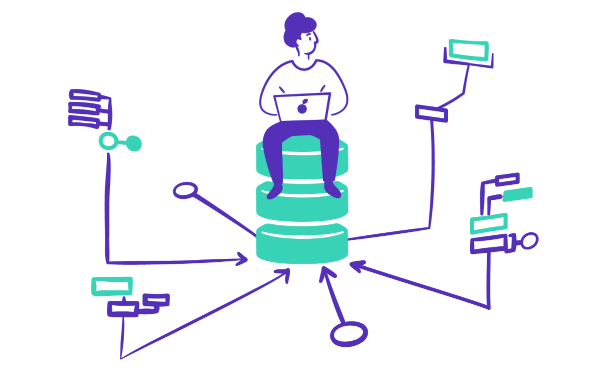

Most data automation tools use ETL. ETL stands for extract-transform-load. ETL is the process used to extract data from different sources, transform that data into a usable and trusted resource, and load it into systems that end users can access and use downstream to solve business problems.

By incorporating ETL tools into your workflow, you can reap the benefits of automation and get through tasks more quickly and efficiently. Rather than entering data by hand, which can be tedious and time-consuming, or manually copying and pasting from CSVs that you download from each of your data sources, each with its own formatting, you can have the data moved and standardized automatically.
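As a rough illustration of the extract, transform, and load steps described above, the following Python sketch reads two CSV exports that use different column names and date formats, normalizes them into one schema, and loads them into a SQLite table. The source names (`ads`, `crm`), column names, and the `daily_amounts` table are hypothetical and chosen only for this example; a real pipeline would pull from live sources and load into a data warehouse.

```python
import csv
import io
import sqlite3
from datetime import datetime

# Hypothetical exports from two sources with different column names and date formats.
ADS_CSV = "Date,Spend\n01/31/2024,120.50\n02/01/2024,98.00\n"
CRM_CSV = "day,amount_usd\n2024-01-31,300\n"

def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows, source, date_col, amount_col, date_fmt):
    """Transform: map source-specific columns onto one shared schema."""
    return [
        (
            source,
            datetime.strptime(row[date_col], date_fmt).date().isoformat(),
            float(row[amount_col]),
        )
        for row in rows
    ]

def load(conn, records):
    """Load: write normalized records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS daily_amounts (source TEXT, day TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO daily_amounts VALUES (?, ?, ?)", records)
    conn.commit()

def run_etl():
    """Run the full pipeline against an in-memory database and return the connection."""
    conn = sqlite3.connect(":memory:")
    load(conn, transform(extract(ADS_CSV), "ads", "Date", "Spend", "%m/%d/%Y"))
    load(conn, transform(extract(CRM_CSV), "crm", "day", "amount_usd", "%Y-%m-%d"))
    return conn

conn = run_etl()
for row in conn.execute("SELECT source, day, amount FROM daily_amounts ORDER BY source, day"):
    print(row)
```

Keeping each stage in its own function means a new source only needs its own column and date-format settings, while the load step stays unchanged.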

Data automation techniques are methods used to automate how data is collected, stored, and analyzed by computer systems. These techniques use software and algorithms to reduce the manual effort required in managing and creating data. Examples of data automation techniques include automated data collection, automated data processing, automated data analysis, and automated data storage.

Different types of data automation techniques can reduce the amount of time and resources required in managing and creating data by automating routine processes, so it’s important to explore various techniques to find what works best for your business. For instance, automated data collection can help users capture large amounts of data quickly and accurately. Data automation tools for processing can quickly analyze data and identify patterns and trends.

Data pipeline automation can mean different things to different users. For some, this is simply the process of automating the steps involved in the extraction, transformation, and loading of data. The name for this process is ETL automation. In this case, data pipeline automation is used to streamline the entire ETL process. It generally utilizes an ETL automation framework and ETL automation testing tools, allowing for faster and more efficient data processing. At the extraction stage, the data is pulled from a variety of sources such as product databases, ad platforms, CRMs, payment solutions, and more.

The data is then transformed into a clean, usable format that can be loaded into a data warehouse or other data storage system. This transformation typically includes data cleansing, formatting, aggregating, and mapping. Depending on the needs of the user, it can occur before or after the data is loaded into the data warehouse (transforming after loading is commonly called ELT). This step can also be automated: once it is set up, it can be scheduled to run as needed.
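To make the four transformation tasks concrete, here is a small Python sketch that cleanses, formats, maps, and aggregates a handful of raw order rows. The field names, the `COUNTRY_MAP` lookup, and the sample rows are assumptions made up for illustration, not part of any particular tool.

```python
from collections import defaultdict

# Hypothetical mapping from source-specific country labels to one standard code.
COUNTRY_MAP = {"usa": "US", "united states": "US", "u.s.": "US", "canada": "CA"}

def clean_and_aggregate(rows):
    """Cleanse, format, map, and aggregate raw order rows into revenue per country."""
    totals = defaultdict(float)
    for row in rows:
        # Formatting: trim whitespace and normalize case.
        country = (row.get("country") or "").strip().lower()
        amount = row.get("amount")
        # Cleansing: drop rows that are missing a required field.
        if not country or amount in (None, ""):
            continue
        # Mapping: translate source labels to a standard code.
        code = COUNTRY_MAP.get(country, "UNKNOWN")
        # Aggregating: sum revenue per country code.
        totals[code] += float(amount)
    return dict(totals)

raw_orders = [
    {"country": " USA ", "amount": "10.00"},
    {"country": "United States", "amount": "5.50"},
    {"country": "Canada", "amount": "7"},
    {"country": "", "amount": "3"},        # missing country, dropped
    {"country": "Canada", "amount": ""},   # missing amount, dropped
]
print(clean_and_aggregate(raw_orders))
```

In a warehouse-centered setup the same logic would more often be written as a SQL transformation, but the steps are the same.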

In addition to automating the steps of the ETL process, data pipeline automation can also be used to automate tasks such as scheduling, monitoring, and alerting. Scheduling allows the ETL process to run regularly. Monitoring ensures that the data is being processed correctly and that there are no errors or issues. Alerting sends notifications in the event of an issue or error. Some users also set up alerts for outcomes that are not errors but are still notable. This might include an alert when a table hits a certain number of rows (to monitor data costs) or when a milestone is reached, such as a large sale.
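The two kinds of alerts described above, one for a likely error and one for a notable but valid outcome, could be sketched in Python as follows. The `events` table, the row threshold, and the `notify` function are hypothetical stand-ins; a real setup would route messages to email or a chat channel rather than printing them.

```python
import sqlite3

def check_table(conn, table, max_rows):
    """Return a list of alert messages for one table; an empty list means nothing to report."""
    alerts = []
    # The table name is interpolated directly, so it must come from trusted configuration.
    count = conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]
    if count == 0:
        # Likely an error: the last load produced no data.
        alerts.append(f"ERROR: {table} is empty; the last load may have failed")
    elif count >= max_rows:
        # Not an error, but notable: the table has grown past a cost threshold.
        alerts.append(f"NOTICE: {table} has {count} rows (threshold {max_rows})")
    return alerts

def notify(alerts):
    """Stand-in for a real notification channel such as email or chat."""
    for message in alerts:
        print(message)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER)")
conn.executemany("INSERT INTO events VALUES (?)", [(i,) for i in range(5)])
notify(check_table(conn, "events", max_rows=5))
```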

As alluded to previously, automated testing plays a critical role in ensuring the quality of data. But what is data testing? More specifically, what is ETL automation testing? Data testing is a process used to evaluate the quality of data that is inputted into a system. It is typically used to ensure that data is accurate, complete, and compliant with the system’s requirements.

ETL automation testing, by extension, is a type of data testing that focuses on the ETL process: extracting data from a source system, transforming it into a format suitable for the target system, and then loading it into the target system. Data testing automation tools are used to ensure that the data is properly transformed and loaded into the target system and that it is accurate and complete. Users can also apply their own knowledge to test for specific outcomes that they know are likely to signal an error. This is where an understanding of the business can make data work much more valuable.
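A minimal sketch of such tests in Python might compare source and target rows for completeness, check required fields, and apply one business rule. The `order_id` and `total` fields and the rule that a negative total signals an upstream error are assumptions made for illustration only.

```python
def run_data_tests(source_rows, target_rows):
    """Return a list of failed checks; an empty list means every test passed."""
    failures = []
    # Completeness: every source row should arrive in the target.
    if len(source_rows) != len(target_rows):
        failures.append(
            f"row count mismatch: source={len(source_rows)} target={len(target_rows)}"
        )
    for i, row in enumerate(target_rows):
        # Required field: each row needs an identifier.
        if row.get("order_id") is None:
            failures.append(f"row {i}: missing order_id")
        # Business rule (hypothetical): a negative total signals an upstream error.
        total = row.get("total")
        if total is not None and total < 0:
            failures.append(f"row {i}: negative total {total}")
    # Uniqueness: identifiers should not repeat after loading.
    ids = [row["order_id"] for row in target_rows if row.get("order_id") is not None]
    if len(ids) != len(set(ids)):
        failures.append("duplicate order_id values")
    return failures

source = [{"order_id": 1, "total": 10.0}, {"order_id": 2, "total": 5.0}]
bad_target = [
    {"order_id": 1, "total": -4.0},
    {"order_id": 1, "total": 5.0},
    {"order_id": None, "total": 1.0},
]
for failure in run_data_tests(source, bad_target):
    print(failure)
```

Returning a list of failures rather than raising on the first one lets a single run report every problem it finds.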

ETL automation tools can greatly enhance your data extraction workflow. Once an ETL pipeline is set up, keeping it running requires minimal work, because the process can be scheduled to run when needed. Most of these tools operate within an ETL automation framework and follow a standard set of procedures to ensure quality and accuracy. A company might want some data sources to update daily, hourly, or even more frequently. Other sources might only need to be synced weekly or monthly. The data pipeline can be set up for these specific needs and, in turn, data transformations need only run when the tables they depend on have new data.
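The scheduling idea above can be sketched in Python: each source has its own sync interval, and a transformation runs only if at least one table it depends on was just refreshed. The source names, intervals, and transformation names here are hypothetical, and real tools typically handle this through their own scheduler configuration.

```python
from datetime import datetime, timedelta

# Hypothetical sync intervals per source.
SYNC_INTERVALS = {
    "payments": timedelta(hours=1),
    "crm": timedelta(days=1),
    "finance_export": timedelta(weeks=1),
}

def sources_due(last_synced, now):
    """Return the sources whose sync interval has elapsed since their last sync."""
    return sorted(
        name
        for name, interval in SYNC_INTERVALS.items()
        if now - last_synced[name] >= interval
    )

def transforms_to_run(dependencies, updated_tables):
    """Return the transformations that depend on at least one table with new data."""
    return sorted(
        name
        for name, tables in dependencies.items()
        if set(tables) & set(updated_tables)
    )

now = datetime(2024, 1, 8, 12, 0)
last_synced = {
    "payments": datetime(2024, 1, 8, 10, 30),
    "crm": datetime(2024, 1, 8, 0, 0),
    "finance_export": datetime(2024, 1, 1, 9, 0),
}
# Hypothetical transformations and the source tables each one reads.
dependencies = {
    "revenue_by_day": ["payments"],
    "lead_funnel": ["crm"],
    "weekly_pnl": ["finance_export", "payments"],
}

due = sources_due(last_synced, now)
print("sync:", due)
print("transform:", transforms_to_run(dependencies, due))
```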

Still, getting started with such tools can be difficult for beginners, which is why it can be helpful to view an ETL automation testing tutorial or to work with an expert at the company providing the tool.

When looking for the best ETL testing tools, you should consider your business needs and how you plan on monitoring data lineage. Mozart Data uses Fivetran and Portable (ETL/ELT tools). The platform’s pipeline management and data lineage tools allow users to manage the data extraction process more efficiently. While data management can be tricky even under the best of circumstances, platforms like Mozart Data make it easy for users to centralize their data so that they can find what they need when they need it and gain full visibility into their pipelines. That visibility saves teams time, so they can focus on data work, not pipeline maintenance.

Data warehouse automation tools are typically related to other data pipeline tools, like automated ETL. Someone might refer to a “data warehouse automation tool” when speaking about their entire data pipeline automation process if they view the warehouse as the center of that process. These users are referring to automating the collection, storage, and analysis of data from multiple sources. They’re also likely referring to the system of queries, or data transformations, that make that data valuable to the company.

Sometimes the output of a data warehouse is still a sheet, whether that be an Excel spreadsheet or a Google Sheet. These tools are still incredibly popular, with users ranging from those just starting to work with data professionally to experienced data analysts and data scientists. They’re also very popular for people with a background in finance who want to work with data, even if they’ve moved into other roles at an organization.

However, Excel is not without its downsides. One of the main reasons why it can be difficult to automate data with Excel is that Excel is primarily a tool for data manipulation and analysis rather than data warehousing. Excel is not designed to handle large and complex data sets, and it can become slow and unresponsive when forced to deal with large amounts of data. It is also a desktop application, meaning it can be difficult to scale and share data across multiple users and departments.

While viewing Excel automation examples and learning how to automate Excel spreadsheets can certainly aid in your data warehousing process, Excel should not be the be-all and end-all of your data automation. Excel is most valuable when connected to a system with data extraction, data warehousing, and data transformation capabilities. In this scenario, the process of feeding data into Excel or Google Sheets can be automated, and these tools can be used for what they are truly intended to do.

You can use tools like Mozart alongside Excel for even better results. For example, with Mozart, you don’t have to manually download CSV files and copy and paste them over and over again before you can perform meaningful analysis.

Instead of trying to force Excel to do everything, you should aim to automate your data work and push it into a web-based business intelligence (BI) tool or Google Sheets. You might also download a CSV of a cleaned-up data set and work with that in the Excel platform instead. Mozart’s data warehouse and data observability tools allow for greater centralization of data so that you don’t have to constantly switch between tools to manage your pipeline. By doing so, you can more quickly identify and solve problems, leading to better business outcomes across the board.
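As one way to picture the CSV hand-off mentioned above, this Python sketch runs a query against a cleaned-up table and writes the result to a CSV file that Excel can open. SQLite stands in for the data warehouse here, and the `monthly_revenue` table, its columns, and the output file name are hypothetical.

```python
import csv
import os
import sqlite3
import tempfile

def export_query_to_csv(conn, query, path):
    """Run a query and write the result, with a header row, to a CSV file."""
    cursor = conn.execute(query)
    headers = [column[0] for column in cursor.description]
    # utf-8-sig adds a byte order mark, which helps Excel detect UTF-8 text.
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.writer(f)
        writer.writerow(headers)
        writer.writerows(cursor)
    return path

# SQLite stands in for the warehouse; the table and values are made up.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE monthly_revenue (month TEXT, revenue INTEGER)")
conn.executemany(
    "INSERT INTO monthly_revenue VALUES (?, ?)",
    [("2024-01", 1200), ("2024-02", 1350)],
)

out_path = os.path.join(tempfile.gettempdir(), "monthly_revenue.csv")
export_query_to_csv(
    conn, "SELECT month, revenue FROM monthly_revenue ORDER BY month", out_path
)
print("wrote", out_path)
```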
