Data Integration and Transformation Tools

Making scattered data useful for analysis

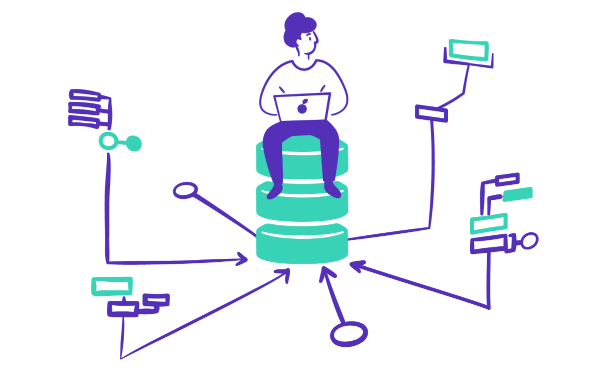

Data integration and transformation tools play a critical role in data management today. But what is data integration? What is data transformation? And why integrate data at all? Data integration is the process of combining data from multiple sources into a single, unified view. This can mean bringing data from different databases, file systems, or other sources such as ad platforms into a single system or database, or it can mean creating a standard data model or schema through which data from multiple sources can be accessed.

Data transformation is the process of converting data from one format or structure to another in order to make it suitable for a specific purpose or use case. This typically involves:

Converting data from one file format to another (e.g., CSV to JSON)

Changing the structure of data, such as flattening nested fields or aggregating data

Combining data from multiple sources into one table and unifying conventions for complex analysis

Mapping data elements from one schema or data model to another

Applying calculations, such as those used to create new variables or metrics

Cleaning data by removing or replacing invalid, missing, or duplicate data.
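A few of the transformations listed above can be sketched in a handful of lines of Python. This is only an illustration using the standard library: the CSV contents, column names, and the `csv_to_records` and `flatten` helpers are hypothetical and not taken from any particular tool.

```python
import csv
import io
import json

# Hypothetical CSV export; the column names are illustrative only.
RAW_CSV = """order_id,customer,quantity,unit_price
1001,Ada,2,9.50
1002,Grace,1,20.00
"""


def csv_to_records(text):
    """Parse CSV text into a list of dicts, casting numeric columns."""
    records = []
    for row in csv.DictReader(io.StringIO(text)):
        row["order_id"] = int(row["order_id"])
        row["quantity"] = int(row["quantity"])
        row["unit_price"] = float(row["unit_price"])
        # Applied calculation: derive a new metric from existing fields.
        row["total"] = round(row["quantity"] * row["unit_price"], 2)
        records.append(row)
    return records


def flatten(record, parent_key="", sep="."):
    """Flatten nested dicts into a single level with dotted keys."""
    flat = {}
    for key, value in record.items():
        full_key = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, full_key, sep))
        else:
            flat[full_key] = value
    return flat


# Format conversion: CSV in, JSON out.
records = csv_to_records(RAW_CSV)
as_json = json.dumps(records, indent=2)

# Structural change: nested fields become flat columns.
nested = {"id": 7, "customer": {"name": "Ada", "address": {"city": "London"}}}
flat = flatten(nested)
```

Here `as_json` holds the orders as a JSON array with the derived `total` field, and `flat` uses dotted keys such as `customer.address.city`, which map more naturally onto table columns.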

The goal of data transformation is to make data usable, accessible, and meaningful for various downstream systems, applications, and analyses. This is often done during the data integration process as a way to ensure that data from multiple sources can be combined and used together effectively.

Data integration and transformation enable complex, high-impact analysis. Siloed data makes in-depth analysis difficult, which is why it’s important to centralize data before digging deeper into it. General-purpose SQL-based tools and dbt are two of the most widely used options for data transformation.

SQL-based tools use SQL (Structured Query Language) as the primary means of interacting with databases. They include SQL editors, SQL clients, and database management tools. dbt (data build tool), by contrast, is a command-line tool built specifically for data transformation. It provides a way to write, organize, and test transformation code, most of which is written in SQL.

To find the best data transformation tool for your business, it can be helpful to browse a data integration and transformation tools list.

Data integration tools are used to bring data from multiple sources together into a single, unified view. These tools can extract data from various sources, transform it to a standard format, and load it into a target system or database. There are a variety of data integration tools available, each with its own strengths and weaknesses. For example, open-source data integration tools let you modify and redistribute the source code, although most users don’t need that level of control.

Cloud-based data integration tools are hosted in the cloud, making them an excellent option for teams that want convenient access from any location. Browsing a data integration tools list can give you an idea of the best data integration tools available today. By studying software integration examples, you can more easily narrow your options to the top integration tools or data integration software.

Extract, Transform, Load (ETL) tools are one of the most popular types of data integration tool. They extract data from a variety of sources, transform it into a common format, and load it into a target system or database. Some of the best ETL tools include:

Fivetran

Talend

Portable

Stitch

Hevo Data
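The extract, transform, load pattern that these tools implement can be sketched in miniature. The example below is a simplified illustration and not how any of the products above works internally: the two in-memory "sources", their field names, and the `customers` table are all hypothetical, and SQLite stands in for the target database.

```python
import sqlite3

# Hypothetical rows standing in for two systems with different conventions.
CRM_ROWS = [{"Email": "Ada@Example.com ", "Plan": "pro"}]
BILLING_ROWS = [{"email": "grace@example.com", "plan_name": "FREE"}]


def extract():
    """Extract: gather raw rows, tagged with the system they came from."""
    for row in CRM_ROWS:
        yield "crm", row
    for row in BILLING_ROWS:
        yield "billing", row


def transform(source, row):
    """Transform: map each source's fields onto one common format."""
    if source == "crm":
        email, plan = row["Email"], row["Plan"]
    else:
        email, plan = row["email"], row["plan_name"]
    return (email.strip().lower(), plan.strip().lower(), source)


def load(conn, rows):
    """Load: write the unified rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(email TEXT PRIMARY KEY, plan TEXT, source TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory database used as the target
load(conn, [transform(source, row) for source, row in extract()])
loaded = conn.execute(
    "SELECT email, plan, source FROM customers ORDER BY email"
).fetchall()
```

After the run, `loaded` contains one row per customer with a consistent email and plan format, regardless of which source system it came from.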

It’s worth noting, however, that some drag-and-drop or “no code” ETL tools, which promise to make data integration easy for non-technical users, are not always the best option for real-world data integration projects. The main reason for this is that drag-and-drop tools tend to lack the flexibility and scalability needed to handle complex data integration scenarios. For example, if you work with big data, you would specifically need to look at ETL tools for big data, and these can be harder to find than more basic tools. It’s worth asking a provider about setting up data connectors (which can typically be accomplished with a no-code tool) and additional capabilities to make sure you’re choosing a tool that can keep up with your needs.

Another type of data integration tool is an Enterprise Service Bus (ESB). An ESB is an integration platform that allows different systems and applications to communicate with one another, regardless of the underlying technologies. The following are some of the best ESB tools around today:

Mule ESB

IBM WebSphere ESB

Oracle Service Bus

Microsoft BizTalk Server

Mozart helps make the data integration process easier, taking things a step beyond standard drag-and-drop tools and allowing for greater centralization and visibility. You can easily connect over 400 data sources and analyze data using the business intelligence tool of your choice. Mozart doesn’t require any coding to start the integration process, making it a great option for those that are looking for something they can get started with right away.

Data transformation tools are used to convert data from one format or structure to another in order to make it suitable for a specific purpose or use case. These tools are commonly used in data integration, data warehousing, and data analytics projects to convert, clean, and reshape data so that it can be used effectively by downstream systems, applications, and analysis.

Data transformation tools are used to perform a variety of tasks, such as:

Data mapping: This process involves mapping data elements from one schema or data model to another. It is often done when integrating data from different sources that use different data models or schemas, because fields need to line up before records from those sources can be combined.

Data cleansing: Data cleansing (or data cleaning) removes or replaces invalid, missing, or duplicate data. This is often done to ensure that the data is accurate and consistent.

Data validation: Data validation involves checking data for errors, inconsistencies, or missing values and then either correcting or flagging them. This step is important in ensuring data quality.

Data aggregation: When data is combined from multiple sources or records into a single value or record, it is called data aggregation. The process plays a crucial role in summarizing data and creating new variables or metrics. A practical example would be combining data from ad platforms, CRMs, and payments processing solutions to build a complete view of a customer.

Data masking or anonymization: This process involves obscuring sensitive information in data, such as personal identification numbers or credit card numbers. It is vital to ensure data privacy and security. Hashing is one technique: it converts a value, such as a person’s name, into a fixed-length string from which the original value is hard to recover. Because short or predictable values can be guessed and re-hashed, a secret key or salt is usually added. This can be very practical in industries with strong privacy standards.
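The tasks above can be chained into a small pipeline. The sketch below uses only the Python standard library. The field names, sample payments, and `SECRET_KEY` are hypothetical, and the masking step uses a keyed hash (HMAC-SHA256), which is one reasonable choice rather than the only one.

```python
import hashlib
import hmac
from collections import defaultdict

# Hypothetical mapping from a source schema to a target schema.
FIELD_MAP = {"cust_email": "email", "amt": "amount", "card": "card_number"}
SECRET_KEY = b"replace-me"  # assumption: a real key would live in a secrets store


def map_fields(row):
    """Data mapping: rename source fields to the target schema's names."""
    return {FIELD_MAP.get(key, key): value for key, value in row.items()}


def cleanse(rows):
    """Data cleansing: normalize emails, drop rows without one, drop duplicates."""
    seen, cleaned = set(), []
    for row in rows:
        email = (row.get("email") or "").strip().lower()
        if not email or row["payment_id"] in seen:
            continue
        seen.add(row["payment_id"])
        cleaned.append({**row, "email": email})
    return cleaned


def validate(row):
    """Data validation: report problems rather than silently fixing them."""
    problems = []
    if "@" not in row["email"]:
        problems.append("email is malformed")
    if not isinstance(row.get("amount"), (int, float)) or row["amount"] < 0:
        problems.append("amount must be a non-negative number")
    return problems


def aggregate(rows):
    """Data aggregation: total amount per customer."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["email"]] += row["amount"]
    return dict(totals)


def mask(row, key):
    """Data masking: replace sensitive values with a keyed SHA-256 digest."""
    masked = dict(row)
    for field in ("email", "card_number"):
        value = str(masked[field]).encode("utf-8")
        masked[field] = hmac.new(key, value, hashlib.sha256).hexdigest()
    return masked


raw = [
    {"payment_id": 1, "cust_email": " Ada@Example.com", "amt": 10.0, "card": "4111111111111111"},
    {"payment_id": 1, "cust_email": " Ada@Example.com", "amt": 10.0, "card": "4111111111111111"},  # duplicate
    {"payment_id": 2, "cust_email": "ada@example.com", "amt": 5.5, "card": "4111111111111111"},
    {"payment_id": 3, "cust_email": None, "amt": 7.0, "card": "4000000000000002"},  # missing email
    {"payment_id": 4, "cust_email": "grace@example.com", "amt": -3.0, "card": "4000000000000002"},  # invalid amount
]

cleaned = cleanse([map_fields(row) for row in raw])
valid = [row for row in cleaned if not validate(row)]
totals = aggregate(valid)
masked = [mask(row, SECRET_KEY) for row in valid]
```

Because the same input always produces the same digest under a given key, masked records can still be joined or counted per customer without exposing the underlying email or card number.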

Many ETL tools, like Fivetran and Hevo Data, have strong data transformation capabilities. This allows the user to start transforming data as it is sent to a destination like a data warehouse, speeding up data work or protecting privacy.

When a transformation tool is used in a data warehouse, it is often known as a data transformation layer, as it can be layered on top of that existing technology. Data transformation layers allow your team to work with the data in your data warehouse. Some users assume they can move straight to a business intelligence (BI) tool at this point, but BI tools have limitations if the data is not properly organized. Data transformation tools make data much more valuable and also make it easier for less technical users to perform analysis with a BI tool later on, democratizing data for your team.

Mozart’s data transformation layer runs on a SQL query editor and allows users to get started with the transformation process right away. With Mozart, you can decrease time to insight by over 70%. You can get to the heart of the matter, making changes to your data before it’s used downstream. In doing so, you create a universal source of truth that can help keep everybody on the same page and prevent confusion. This is critical to ensuring success with your data transformation process and, ultimately, saving time and money. Rather than wasting another minute on manual data processing, you can offload that task to an advanced platform that offers greater visibility.

Mozart Data’s transformation tooling also has strong automation capabilities. You can set data transformations to run when the tables they depend on are synced, or as often as you need, so you aren’t wasting computing resources (and thus money) transforming data that hasn’t changed.
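The general idea of running a transformation only when its upstream tables have changed can be sketched as follows. This is a generic illustration and not a description of how Mozart Data implements it: the table names, timestamps, and the `stale_transforms` helper are all hypothetical.

```python
from datetime import datetime

# Hypothetical metadata: when each source table last synced.
last_synced = {
    "orders": datetime(2024, 1, 2, 9, 0),
    "customers": datetime(2024, 1, 1, 9, 0),
    "ad_spend": datetime(2024, 1, 1, 9, 0),
}

# Hypothetical transforms, the tables they read, and when they last ran.
transforms = {
    "customer_revenue": {
        "depends_on": ["orders", "customers"],
        "last_run": datetime(2024, 1, 1, 12, 0),
    },
    "ad_performance": {
        "depends_on": ["ad_spend"],
        "last_run": datetime(2024, 1, 1, 12, 0),
    },
}


def stale_transforms(transforms, last_synced):
    """Return transforms with at least one upstream table synced after their last run."""
    return sorted(
        name
        for name, spec in transforms.items()
        if any(last_synced[table] > spec["last_run"] for table in spec["depends_on"])
    )


to_run = stale_transforms(transforms, last_synced)
```

In this example only `customer_revenue` needs to run again, because `orders` synced after its last run, while `ad_performance` is skipped since `ad_spend` has not changed.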

Data integration in a data warehouse is typically focused on combining large amounts of data from different sources, such as transactional systems and external data sources, into a central repository for reporting and analysis. The data can be transformed and cleaned before it is loaded into the data warehouse.

There are various data integration techniques you can use with warehouses and databases. By becoming familiar with some of the most popular integration tools and techniques, you can manage your data more effectively. For example, if you already have everything in your data warehouse, you might want to combine data from two different tables for analysis. Data transformation tools and techniques can help you do that. You could write an ad hoc query in your transformation layer to combine the tables, then automate that query if you want it to run again on a schedule.
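An ad hoc query that combines two tables might look like the sketch below. It uses Python’s built-in `sqlite3` module as a stand-in for a data warehouse, and the `customers` and `payments` tables and their columns are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory stand-in for a warehouse
conn.executescript(
    """
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE payments (
        payment_id INTEGER PRIMARY KEY,
        customer_id INTEGER,
        amount REAL
    );
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO payments VALUES (10, 1, 20.0), (11, 1, 5.0), (12, 2, 7.5);
    """
)

# Join the two tables and summarize payments per customer.
AD_HOC_QUERY = """
SELECT c.name,
       COUNT(p.payment_id) AS payments,
       SUM(p.amount)       AS revenue
FROM customers AS c
JOIN payments AS p ON p.customer_id = c.customer_id
GROUP BY c.customer_id, c.name
ORDER BY revenue DESC
"""

result = conn.execute(AD_HOC_QUERY).fetchall()
```

Keeping the query in a named constant is a small step toward automation: the same statement can be saved and re-run on a schedule rather than retyped each time.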
