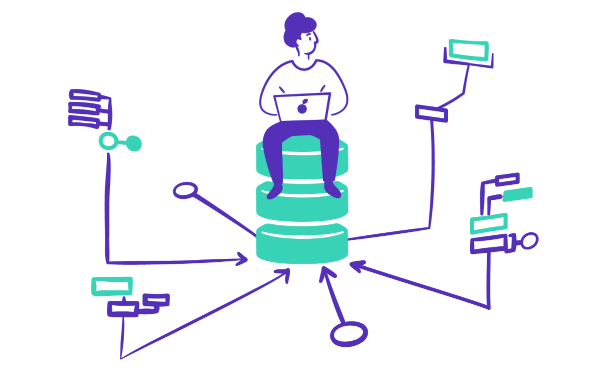

Data transformation tools make your data more manageable by performing tasks to organize it for analysis, such as joining disconnected sources. They work within your data pipeline to make sure your final data is ready for consumption by various teams, BI tools, or AI tools. Because they can have a major effect on the quality of your analytics and operations, transformation tools are a core component in a successful data strategy.

The purpose of transforming data is to make it easier to work with for data analysis. Without a data transformation tool, preparing raw data for analysis requires manual work. Combining data from different sources by hand, or finding and fixing errors, is slow and burdensome. Data transformation tools help you:

Combine datasets for analysis, including from different data sources (e.g., combining Salesforce data with payments data, or product usage data with HubSpot data)

Delete unneeded or duplicated data

Format data into a usable form

Unify formatting (e.g. date formats from data across sources)

Fix errors in datasets

Prepare data for AI/ML tools

Much of this can be summed up as cleaning and organizing data for analysis. Your exact needs depend on your goals and the kinds of insights you are looking for in the data. And while data transformation is key to getting the most out of your data, it is only one piece of your data stack, which typically includes:

Data extraction tool: Transfers data from sources to storage. Typically an ETL or ELT tool. This portion of the process is sometimes referred to as the data pipeline, or building a data pipeline. Depending on the need, some data transformation may begin during the extract and load phase.

Data warehouse: Stores data and enables analysis. Data warehouses are also designed for other tools to attach or “layer” onto them (e.g., data transformation tools, machine learning tools, business intelligence (BI) tools).

Data catalog: Organizes metadata about your data so users can find and understand what is available.

Data transformation tool: Cleans, joins, and edits data.

Data analytics tools: Visualize and interpret data. Most often a BI tool, but advanced data users may layer an AI data insights tool onto their data stack as well.

Transformation needs to work harmoniously with other data integration, storage, and analytic tools. In many cases, data transformation occurs as part of the data pipeline.

Data transformation means changing raw data to make it more useful. It can involve changing the data's style or structure, adding data, or removing data. These correspond to four types of transformation:

Aesthetic – standardizes style

Structural – alters columns or rows in a structured database

Constructive – adds or replicates data

Destructive – removes data that’s not needed
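To make the four types concrete, here is a minimal Python sketch that applies each one to a few hypothetical order records. The field names (`order_id`, `order_date`, `internal_note`, and so on) and the two accepted date formats are assumptions chosen for illustration, not the behavior of any particular tool.

```python
from datetime import datetime

# Hypothetical raw order records, as they might arrive from two sources
raw_orders = [
    {"order_id": 1, "customer": "Ada", "order_date": "03/14/2024", "amount": "120.50", "internal_note": "vip"},
    {"order_id": 2, "customer": "Grace", "order_date": "2024-03-15", "amount": "80.00", "internal_note": ""},
    {"order_id": 2, "customer": "Grace", "order_date": "2024-03-15", "amount": "80.00", "internal_note": ""},
]


def standardize_date(value: str) -> str:
    """Aesthetic: normalize ISO and US-style dates to ISO 8601 (YYYY-MM-DD)."""
    for fmt in ("%Y-%m-%d", "%m/%d/%Y"):
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {value!r}")


def transform(rows: list[dict]) -> list[dict]:
    seen_ids = set()
    result = []
    for row in rows:
        # Destructive: drop duplicate rows that share an order_id
        if row["order_id"] in seen_ids:
            continue
        seen_ids.add(row["order_id"])
        amount = float(row["amount"])  # parse the text amount into a number
        result.append({
            "order_id": row["order_id"],
            "customer_name": row["customer"],                   # Structural: rename a column
            "order_date": standardize_date(row["order_date"]),  # Aesthetic: one date style
            "amount": amount,
            "amount_cents": round(amount * 100),                # Constructive: derived column
            # Destructive: "internal_note" is dropped by simply not copying it
        })
    return result


clean_orders = transform(raw_orders)
for order in clean_orders:
    print(order)
```

Real pipelines usually express the same steps in SQL or a transformation tool, but the categories map the same way.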

Individual use cases determine which types of data transformation are necessary, ranging from a simple style change to major changes in content and structure. Common examples include:

Connecting two databases together

Combining data from two or more data sources

Aggregating data to show totals over a time period

Removing duplicate data (deduplication)

Adding placeholders for null fields to prevent errors

Removing identifying data in order to preserve privacy and meet compliance needs for HIPAA or GDPR

Preparing data from one application to be used in another application, like a BI tool
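Several of these examples can be expressed in a single SQL query. The sketch below uses Python's built-in `sqlite3` module as a stand-in for a warehouse; the `crm_customers` and `payments` tables and the `pseudonymize` function are hypothetical. Note that hashing an email is pseudonymization rather than full anonymization, so on its own it may not be enough to meet HIPAA or GDPR requirements.

```python
import hashlib
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse

# Two hypothetical sources: a CRM export and a payments export
conn.executescript("""
CREATE TABLE crm_customers (customer_id INTEGER, email TEXT, region TEXT);
CREATE TABLE payments (payment_id INTEGER, customer_id INTEGER, paid_at TEXT, amount REAL);

INSERT INTO crm_customers VALUES
  (1, 'ada@example.com', 'EMEA'),
  (2, 'grace@example.com', NULL);

INSERT INTO payments VALUES
  (10, 1, '2024-01-05', 100.0),
  (10, 1, '2024-01-05', 100.0),  -- same payment synced twice
  (11, 1, '2024-02-10', 50.0),
  (12, 2, '2024-02-11', 75.0);
""")

# Expose a hashing function to SQL so emails can be replaced with opaque keys
conn.create_function(
    "pseudonymize", 1, lambda s: hashlib.sha256(s.encode("utf-8")).hexdigest()[:12]
)

rows = conn.execute("""
WITH deduped AS (
  SELECT DISTINCT payment_id, customer_id, paid_at, amount  -- deduplication
  FROM payments
)
SELECT
  pseudonymize(c.email)         AS customer_key,  -- remove identifying data
  COALESCE(c.region, 'unknown') AS region,        -- placeholder for nulls
  substr(d.paid_at, 1, 7)       AS month,         -- YYYY-MM bucket
  SUM(d.amount)                 AS total          -- aggregate per month
FROM deduped AS d
JOIN crm_customers AS c ON c.customer_id = d.customer_id  -- combine two sources
GROUP BY c.email, c.region, substr(d.paid_at, 1, 7)
ORDER BY month, total
""").fetchall()

for row in rows:
    print(row)
```

Because the duplicate payment is removed before aggregation, the January total for the first customer is 100.0 rather than 200.0.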

While data transformation supports improved analytics, it also boosts the quality of data shared with the rest of your team, most notably for people who are not data specialists and might not be used to preparing data for analysis. By helping to create a reliable database of record that can include customer data or transaction data, it can drive better-informed decisions for everyone in your organization. This is often referred to as a universal source of truth or a single source of truth.

A data transformation tool provides a way to automate and schedule updates to the content and structure of data. Data transformation tools may be bundled with extract and load services or offered on their own, may be open source or licensed, and may be code-based or no-code. Code-based tools use a variety of programming languages. Popular options include:

Fivetran

Mozart Data

Talend

Portable

Stitch

Hevo Data

dbt

Microsoft Power Query

SAP HANA

Transformation capabilities are included in ETL tools that manage bulk file and database transfers. If you are moving databases full of data, you will need a transformation tool to join that data with other sources and prepare it for use in analytics and/or operations. When data transformation happens without extract/load services, it typically involves a smaller dataset and an open-source tool.

It’s worth clarifying that even if data is transformed during the ETL process, many organizations still want a tool like Mozart Data or dbt that allows them to transform data further in their data warehouse.

Using an open-source data transformation tool will save on upfront costs, but it will cost extra in labor hours spent maintaining the data transfers and connections between your sources. As a result, the total cost of ownership of an open-source tool may be higher, reliability can suffer, and the learning curve is much steeper.

|  | Open-source tools | Licensed tools |
|---|---|---|
| Pros | Low or no upfront costs; maximum customization | Dedicated support; more pre-built transforms; less in-house expertise required |
| Cons | May require expert staff; hours of maintenance; lack of support | Less customization; cost of license |

Next, let’s distinguish between transformation tools that work with code and tools that do not require code. Code-based tools provide more options and support more complex analysis, but they require more specialist knowledge to write and run. No-code tools have visual interfaces that anyone can use, but they provide less flexibility.

For code-based data transformation tools, the two main languages are SQL and Python. Other options include R and dbt's templated SQL (standard SQL plus the Jinja templating language).

Python relies on programming libraries (such as pandas) to process data. These libraries give Python more options than SQL, including machine learning, numerical routines, and mathematical operations, though Python transformations can be slower to run and harder to maintain than equivalent SQL. Python is mostly used by large, highly technical data teams.

SQL is a query language that runs inside the database itself. It is best at core transformation tasks such as joining multiple tables in a single database. While it lacks Python's advanced options, SQL typically runs these queries faster and more reliably.

SQL is also relatively easy to learn and use. This not only allows people with less technical backgrounds to become strong data users; it also enables data analysts and scientists to quickly get up to speed and collaborate with one another.
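The difference in style is easiest to see side by side. This sketch computes revenue per customer twice, once with a SQL join (through Python's built-in `sqlite3`) and once in plain Python. The `orders` and `customers` data are made up for illustration.

```python
import sqlite3
from collections import defaultdict

orders = [(1, 101, 30.0), (2, 102, 20.0), (3, 101, 50.0)]  # (order_id, customer_id, amount)
customers = [(101, "Acme"), (102, "Globex")]               # (customer_id, name)

# SQL: declarative; you describe the result and the engine decides how to join
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL)")
conn.execute("CREATE TABLE customers (customer_id INTEGER, name TEXT)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", orders)
conn.executemany("INSERT INTO customers VALUES (?, ?)", customers)
sql_result = conn.execute("""
    SELECT c.name, SUM(o.amount) AS revenue
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()

# Python: imperative; you spell out the lookup and the aggregation yourself
names = dict(customers)
totals = defaultdict(float)
for _, customer_id, amount in orders:
    totals[names[customer_id]] += amount
py_result = sorted(totals.items())

print(sql_result)  # [('Acme', 80.0), ('Globex', 20.0)]
print(py_result)   # [('Acme', 80.0), ('Globex', 20.0)]
```

For a simple join like this the SQL version is shorter and easier for an analyst to read; the Python version becomes worthwhile when the logic goes beyond what SQL expresses comfortably.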

Data transformation is a key part of any data strategy, but just as important are ETL data integration tools. If you perfectly transform data, but don’t include all the sources you need, you are going to be running operations and analytics with huge pieces of the puzzle missing.

Data transformation can happen on its own, but it is often bundled with data extraction services that centralize data. This combined process of moving bulk data while transforming it is known as ETL.

ETL combines data transformation with data centralization. Its name stands for extract, transform, and load. Extract and load refer to the data transfer parts of the process. In ETL, data is transformed in a staging area before it is loaded to a final separate storage site like a data warehouse. ETL is designed so that data is accessible and ready to use as soon as the process finishes.
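A minimal ETL sketch, assuming a hypothetical source that returns user records and using an in-memory SQLite database as a stand-in for the warehouse. The point to notice is the ordering: records are cleaned in a staging step before anything is loaded.

```python
import sqlite3


def extract() -> list[dict]:
    """Extract: pull raw records from a source (hard-coded here in place of an API call)."""
    return [
        {"id": "1", "email": " Ada@Example.com ", "signup": "2024-03-01"},
        {"id": "2", "email": "grace@example.com", "signup": "2024-03-02"},
    ]


def transform(records: list[dict]) -> list[tuple]:
    """Transform in a staging step, before anything reaches the warehouse."""
    return [(int(r["id"]), r["email"].strip().lower(), r["signup"]) for r in records]


def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Load: write only the already-cleaned rows into the destination table."""
    conn.execute("CREATE TABLE IF NOT EXISTS users (id INTEGER PRIMARY KEY, email TEXT, signup TEXT)")
    # INSERT OR REPLACE keeps a rerun of the job from duplicating rows
    conn.executemany("INSERT OR REPLACE INTO users VALUES (?, ?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
load(warehouse, transform(extract()))
print(warehouse.execute("SELECT * FROM users ORDER BY id").fetchall())
```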

There is another option for bundled transformation and data integration services called ELT. In this arrangement, data transformation happens after data is loaded, which is why the ‘T’ for transform has moved to the end of the name. This removes the need to pre-program transformations before connecting all your data sources, and it supports more creative uses of transformations after collection.
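For contrast, here is a minimal ELT sketch under similar assumptions (hypothetical user records, SQLite standing in for a warehouse). The raw data is loaded untouched, and the transformation is defined afterwards as SQL inside the warehouse, here as a view.

```python
import sqlite3

warehouse = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse

# Extract + Load: land the raw data exactly as it arrived
warehouse.execute("CREATE TABLE raw_users (id TEXT, email TEXT, signup TEXT)")
warehouse.executemany(
    "INSERT INTO raw_users VALUES (?, ?, ?)",
    [("1", " Ada@Example.com ", "2024-03-01"), ("2", "grace@example.com", "2024-03-02")],
)

# Transform: written afterwards, in SQL, on top of the raw table.
# The view can be redefined later without reloading anything from the source.
warehouse.execute("""
    CREATE VIEW users AS
    SELECT CAST(id AS INTEGER) AS id,
           LOWER(TRIM(email))  AS email,
           signup
    FROM raw_users
""")
print(warehouse.execute("SELECT * FROM users ORDER BY id").fetchall())
```

Because the raw table is kept, a new transformation can be added later against data that was loaded before anyone knew it would be needed.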

The major choice to make when using data transformation is where you want it to take place in the data stack. Your options are: while the data is being moved from its sources into storage (ETL paradigm) or after data has been collected (ELT paradigm).

ELT provides more flexibility in when transformations are applied and works well for a wide range of workloads. ELT is especially useful for large workloads because it allows incremental transforms: instead of reprocessing everything, users process only new or changed data, and only when it is needed. ETL is more traditional and works well with predictable data flows and with cases that require processing before storage, such as removing private data for compliance.
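Incremental transforms are often implemented with a watermark that records how far processing has progressed. The sketch below shows one simple approach, assuming event IDs only ever increase (late rows with lower IDs would be missed). The table names are hypothetical, and the `ON CONFLICT ... DO UPDATE` upsert requires SQLite 3.24 or later.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE raw_events (event_id INTEGER PRIMARY KEY, day TEXT, amount REAL);
CREATE TABLE daily_totals (day TEXT PRIMARY KEY, total REAL);
CREATE TABLE watermark (last_event_id INTEGER);
INSERT INTO watermark VALUES (0);
""")


def incremental_transform(conn: sqlite3.Connection) -> int:
    """Fold only events newer than the watermark into daily_totals."""
    (last_id,) = conn.execute("SELECT last_event_id FROM watermark").fetchone()
    new_rows = conn.execute(
        "SELECT day, SUM(amount), MAX(event_id) FROM raw_events "
        "WHERE event_id > ? GROUP BY day",
        (last_id,),
    ).fetchall()
    for day, amount, _ in new_rows:
        # Upsert: add to an existing day's total, or create the row
        conn.execute(
            "INSERT INTO daily_totals VALUES (?, ?) "
            "ON CONFLICT(day) DO UPDATE SET total = total + excluded.total",
            (day, amount),
        )
    if new_rows:
        # Advance the watermark past everything just processed
        conn.execute("UPDATE watermark SET last_event_id = ?", (max(r[2] for r in new_rows),))
    conn.commit()
    return len(new_rows)


# First run processes events 1-2; second run touches only events 3-4
conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", [(1, "2024-05-01", 10.0), (2, "2024-05-01", 5.0)])
incremental_transform(conn)
conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", [(3, "2024-05-01", 1.0), (4, "2024-05-02", 7.0)])
incremental_transform(conn)
print(conn.execute("SELECT * FROM daily_totals ORDER BY day").fetchall())
```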

In ELT, tools that provide transformation after data is loaded into a data warehouse can be referred to as a data transformation layer.

Organizations don’t have to choose one or the other: it is common to use an ETL framework for repetitive data tasks when the transformation will perform the same function every time while using an ELT framework for new data work and data exploration.

The best data transformation tools fall into two main camps: standalone transformation tools and ETL tools. In most cases, users with significant data volumes and many data sources will use an ETL tool at some point in the process.

Standalone transformation tools transform data where it is already stored. Leading examples include dbt, whose core version (dbt Core) is open source, and Microsoft’s Power Query. Both are lightweight and effective at transforming data.

dbt stands for data build tool. It’s a specialist tool that can build datasets for machine learning (ML), modeling, and operations. dbt uses the Jinja templating language to control SQL transforms.
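In dbt, models reference one another with a `ref()` call inside Jinja, and dbt uses those references to work out the order in which to build models. The Python sketch below imitates that idea with a regular expression and the standard library's `graphlib`. The model names and the `analytics` schema are hypothetical, and this is an illustration of the concept, not dbt's implementation.

```python
import re
from graphlib import TopologicalSorter

# Hypothetical model definitions: SQL with a {{ ref('...') }} placeholder,
# loosely imitating dbt's syntax
models = {
    "customer_revenue": (
        "SELECT c.name, SUM(o.amount) AS revenue "
        "FROM {{ ref('stg_orders') }} AS o "
        "JOIN {{ ref('stg_customers') }} AS c USING (customer_id) GROUP BY c.name"
    ),
    "stg_orders": "SELECT * FROM raw.orders WHERE amount IS NOT NULL",
    "stg_customers": "SELECT DISTINCT customer_id, name FROM raw.customers",
}

REF = re.compile(r"\{\{\s*ref\('([^']+)'\)\s*\}\}")


def dependencies(sql: str) -> set[str]:
    """Return every model name this SQL refers to via ref()."""
    return set(REF.findall(sql))


def render(sql: str, schema: str = "analytics") -> str:
    """Replace each ref('x') placeholder with a concrete table name such as analytics.x."""
    return REF.sub(lambda m: f"{schema}.{m.group(1)}", sql)


# The build order is inferred from the references: upstream models come first
graph = {name: dependencies(sql) for name, sql in models.items()}
build_order = list(TopologicalSorter(graph).static_order())

for name in build_order:
    print(f"-- building {name}")
    print(render(models[name]))
```

Nobody wrote the build order down; it falls out of the references, which is why adding a new model only requires referencing its inputs.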

Microsoft Power Query is a data preparation tool for Microsoft Excel; it began as an add-in and is now built into Excel. Estimated to have up to 20,000 users, it runs simple transformations on Excel spreadsheets through a visual interface. Advanced users can dig deeper by writing transformations in Microsoft’s proprietary M language.

All-in-one modern data platforms like Mozart Data also include a data transformation layer in their collection of tools, giving their users maximum flexibility. Mozart Data’s data transformation tool is built on a SQL query editor.

While standalone transformation tools can occupy a niche role, for use cases involving multiple sources of data, an ETL tool is often the best tool for the job. Because they are purpose-built to combine moving and transforming data, ETL tools are very reliable. They can also greatly reduce the labor hours spent managing data integrations.

Why combine extract and load with transformation?

Interoperability

Reliability

Reduced maintenance and cost

If you have significant transformation needs, it’s worthwhile to invest in a reliable ETL tool that also addresses your data integration needs. Two of the most widely used tools for data transformation in this context are Fivetran and Stitch Data Loader, a Talend product.

Fivetran offers straightforward, reliable ELT services for moving data to cloud destinations including GCP, AWS, Azure, and Snowflake. Fivetran uses dbt Core, one of the best open-source transformation tools, both to track and to perform transforms as part of its ELT services.

Stitch Data Loader is another common choice. It is simple to implement and has strong customer support, although it can become costly as companies scale.

Between these extremes of single tools and complex yet insular ecosystems, there are tools like Mozart Data and Hevo Data that combine the data transformation features that work best for most businesses.

Mozart Data combines the best transformation and pipeline management tools with leading extract and load services from Fivetran in a ready-to-use data pipeline. Mozart gives users custom support for data sources via Portable and additional flexibility to transform data after it is collected in a Snowflake data warehouse.

By connecting these best-of-breed transformation tools with managed ETL, Mozart removes the need for data engineers to build a complex pipeline. With Mozart, the pipeline is inferred as it is assembled, so it is easier to manage and understand, and connecting new data sources requires no code. It may sound complicated to less experienced data users, but it isn’t: users can start connecting data and building transforms in minutes.

With no barriers to entry and no extra maintenance tasks — this is what a modern data platform should look like. Contact us to see how Mozart can instantly improve your data.
