ETL and ELT Tools: Definition and Top Tools

Break down data silos and get your team the data they need

Today, data drives the decision-making process at organizations of all sizes. But the sheer amount of data at a business’s disposal can far outstrip its ability to comprehend it all manually, especially at scale.

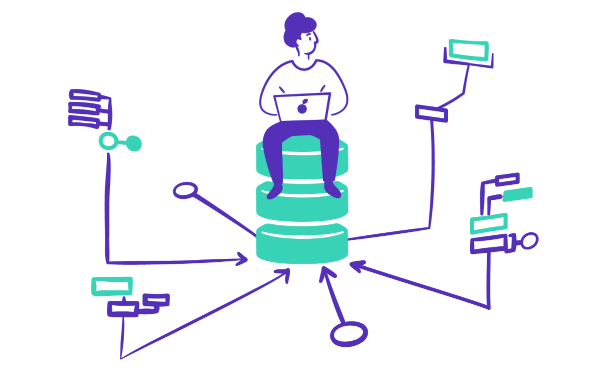

Organizations rely on data integration to make sense of it all. ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) tools facilitate data-informed growth by aggregating data from various sources and priming it for analysis inside target destinations, like data warehouses, databases, and data lakes.

Both ETL and ELT tools extract data. But swapping the order of the “load” and “transform” steps changes where and when the rest of the data integration process happens.

ETL tools represent the traditional approach. An ETL data pipeline relies on an intermediary platform that transforms data into the target repository’s format. The transformed data is then loaded into the target for further analysis. ETL tools are often best suited to batch processing and the movement of structured, repeatable data.

In an ELT architecture, raw data is loaded directly into a target system, like a data warehouse or data lake, without being transformed first. The upshot of this process? Raw data can be stored in the target system for future ad-hoc or on-the-fly transformations. This is useful for surfacing new analytic angles from the same dataset.

ELT represents a more recent evolution of the traditional ETL data integration method. But both pipelines have distinct advantages, disadvantages, and use cases. Let’s unpack these differences further to understand their impact on tooling.

The primary difference between the ETL and ELT data pipelines? When and where transformation occurs.

Traditional ETL (extract, transform, load) data integration involves merging data from various sources for staging. Staging and transformation take place in an environment separate from the target location.

In the staging area, languages like SQL shape data through a series of transformations into a format the target system can use. Transformations might include cleansing, standardizing, and validating the data. Finally, the transformed data is loaded into the target system, where it’s ready for further analysis.
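As a rough illustration of that flow, here is a minimal Python sketch. Everything in it is an assumption made for the example: the two source lists, the `orders` table, and the validation rules are hypothetical, and an in-memory SQLite database stands in for the target system.

```python
import sqlite3

# Hypothetical records pulled from two made-up sources (a CRM and a web form).
crm_rows = [
    {"email": " Ada@Example.com ", "country": "us", "amount": "120.50"},
    {"email": "bob@example.com", "country": "DE", "amount": "80"},
]
form_rows = [
    {"email": "not-an-email", "country": "fr", "amount": "15"},
    {"email": "cy@example.com", "country": "FR", "amount": "abc"},
]


def extract():
    """Merge rows from every source into one staging list."""
    return crm_rows + form_rows


def transform(rows):
    """Cleanse, standardize, and validate rows before they reach the target."""
    clean = []
    for row in rows:
        email = row["email"].strip().lower()  # cleanse
        country = row["country"].upper()      # standardize
        try:
            amount = float(row["amount"])
        except ValueError:
            continue                          # validate: drop unparseable amounts
        if "@" not in email:
            continue                          # validate: drop malformed emails
        clean.append((email, country, amount))
    return clean


def load(rows, conn):
    """Write only the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (email TEXT, country TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


target = sqlite3.connect(":memory:")  # stands in for the warehouse
load(transform(extract()), target)
loaded = target.execute(
    "SELECT email, country, amount FROM orders ORDER BY email"
).fetchall()
print(loaded)
```

Only the two valid rows reach the target. The rejected rows never leave staging, which is the trade-off of transforming before loading.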

On the other hand, ELT (extract, load, transform) is a data integration process that involves loading structured or unstructured data directly into a target data warehouse or data lake. Then, transformation of data takes place in the same environment where data is stored.

For businesses dealing with large quantities of data, ELT is a more flexible option. With ELT, analysts can repeatedly query the same untransformed data in response to shifting priorities and variables.
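To make the load-first pattern concrete, here is a small Python sketch. It is an illustration under stated assumptions: the `raw_orders` table and its rows are invented, and an in-memory SQLite database stands in for a cloud warehouse.

```python
import sqlite3

warehouse = sqlite3.connect(":memory:")  # stands in for a cloud warehouse

# Load: raw rows land untouched, with every column stored as text.
warehouse.execute("CREATE TABLE raw_orders (email TEXT, country TEXT, amount TEXT)")
warehouse.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [
        (" Ada@Example.com ", "us", "120.50"),
        ("bob@example.com", "DE", "80"),
        ("cy@example.com", "de", "19.50"),
    ],
)

# Transform 1: revenue per country, computed inside the target.
revenue_by_country = warehouse.execute(
    """
    SELECT UPPER(country) AS country_code,
           SUM(CAST(amount AS REAL)) AS revenue
    FROM raw_orders
    GROUP BY UPPER(country)
    ORDER BY country_code
    """
).fetchall()

# Transform 2: a later, different question asked of the same raw rows.
large_order_emails = warehouse.execute(
    """
    SELECT LOWER(TRIM(email))
    FROM raw_orders
    WHERE CAST(amount AS REAL) >= 100
    """
).fetchall()

print(revenue_by_country)
print(large_order_emails)
```

Because the raw table is never overwritten, the second query can apply different cleanup rules than the first without re-extracting anything from the source.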

The rise of cloud-based infrastructure has largely enabled the development of ELT data integration. Transformation is resource-intensive, but cloud data warehouses like Snowflake have the processing power and storage capacity to run transformations at scale.

The data strategy of your business will depend on factors like resourcing, target system requirements, and the data itself.

Some scenarios where ETL might make sense include:

For other situations, ELT might make more sense:

Depending on your business’s needs, a mix of ETL and ELT tools can be beneficial, and most tools do both. If you’re building a shortlist of ELT tools for data extraction and load, the list below includes both subscription-based platforms and open-source options.

Fivetran is a cloud-based ELT tool for automating data extraction, loading, and in-warehouse transformations. With automated data integrations and query-ready schemas, Fivetran is a natural choice for teams looking to automate away repetitive data pipeline workflows. Its connectors protect data integrity and reliability by adapting to changes in source APIs and schemas, and data analysts benefit from continuous data synchronization between sources and target warehouses.
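For a sense of what adapting to a schema change can involve, here is a deliberately simplified Python sketch. It is not how Fivetran or any other vendor implements its connectors. The `sync` function, the `contacts` table, and the new `plan` field are all hypothetical, and SQLite stands in for the warehouse.

```python
import sqlite3


def sync(conn, table, records):
    """Load records, adding a TEXT column for any field the table lacks.

    Table and field names are interpolated into SQL, so this sketch assumes
    they come from a trusted source.
    """
    conn.execute(f'CREATE TABLE IF NOT EXISTS "{table}" (id TEXT PRIMARY KEY)')
    existing = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    for record in records:
        for field in record:
            if field not in existing:
                # Schema drift: the source sent a field the target has not seen.
                conn.execute(f'ALTER TABLE "{table}" ADD COLUMN "{field}" TEXT')
                existing.add(field)
        columns = ", ".join(f'"{name}"' for name in record)
        placeholders = ", ".join("?" for _ in record)
        conn.execute(
            f'INSERT OR REPLACE INTO "{table}" ({columns}) VALUES ({placeholders})',
            list(record.values()),
        )
    conn.commit()


conn = sqlite3.connect(":memory:")
sync(conn, "contacts", [{"id": "1", "email": "ada@example.com"}])
# The hypothetical source API later starts returning a new "plan" field.
sync(conn, "contacts", [{"id": "2", "email": "bob@example.com", "plan": "pro"}])
rows = conn.execute('SELECT id, email, "plan" FROM contacts ORDER BY id').fetchall()
print(rows)
```

Rows loaded before the change keep a NULL in the new column, so earlier syncs stay valid. A production connector also has to handle type changes, renamed fields, and deletions, which this sketch ignores.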

Featuring more than 450 data source connectors (as of April 2023), Portable empowers businesses to build custom ELT integrations that capture “the long tail” of business intelligence. Supported data sources run the gamut of SaaS applications, including CRMs, marketing analytics tools, and ticketing systems. It loads data into the databases and warehouses you’d expect, including Snowflake, BigQuery, Redshift, and Azure. Portable typically complements another core ELT tool.

Hevo Data provides near real-time visibility for teams that draw insights from multi-source data. The platform features 150+ pre-built integrations across cloud storage, SaaS applications, cloud databases, and more. More than 1,000 companies prefer Hevo.

If you’re not afraid to throw some dev time at deploying an in-house solution, Airbyte’s free open-source tier lifts some of the burden with an array of ready-to-go connectors.

Airbyte also reaps the benefits of a large contributor community — often, when API sources or schemas change, the community will have already made the necessary connector changes for you. But the open-source tier will also require debugging without the assistance of a dedicated support team.

An ELT tool acquired by Talend in 2018, Stitch is another option for data extraction and load that builds on open-source connectors. Stitch is a cloud-based platform known for its data ingestion capabilities and is used by over 3,000 companies. It connects to all major databases and data lakes and offers open-source connectors via the Singer toolkit, with the option to build any that are missing.

Data is generated in many ways. Smart devices in factories capture machine sensory data at the edge. Businesses create and update client info in CRMs. Would-be eCommerce shoppers generate cookie and tracking data when they browse stores online.

The purpose of any data pipeline is to move data from its starting point to places where data consumers can interpret it and capture insights. ETL/ELT makes this possible by building a pipeline through which essential data is captured, transformed, and loaded into repositories for further examination by business intelligence or analytics tools.

Businesses essentially have three options when it comes to setting up a pipeline of reliable ETL/ELT data:

While it’s possible to make numbers 1 and 2 work, they’re both tedious, lengthy, and resource-intensive options. Going with an external tool, whether subscription SaaS or open source, is the straightest path toward modernizing your data stack. It’s also the easiest way to automate the process for additional benefits.

An automated data pipeline is an essential part of the modern data stack. Some reasons why include:

From extraction and load to data transformation, automation helps businesses make the most out of their pipelines.

Different ETL/ELT use cases mean no single data integration method is right for your business all the time. Are ETL or ELT tools right for you? The short answer: it depends.

Ready to learn how an automated ETL/ELT data pipeline can drive growth for your business? Get in touch with our team.
