Helping a customer take a 16-hour query to just 5 minutes

Mozart Data is a full-service modern data platform designed to help customers centralize, organize, and analyze their data. To many of our customers, especially those without full-time data teams, the most valuable part of our service is the expert data analyst support we offer.

Our analysts help customers with everything from getting set up on the platform to tackling specific projects that require technical expertise. Recently, one of our customers came to us for help tracking a core growth metric much more efficiently.

The problem

Our customer came to us with a problem: they have a core metric that they need to be able to monitor closely, but that requires them to write a query on their 1.7B row events table. The good news is our customer knew where to look for what they needed. The bad news is that this query took 16 hours to run.

There are a number of potential problems that accompany a 16-hour query, like how difficult it is to manage your data efficiently if there are any issues in your data pipeline. If you have a data quality error feeding into that events table, your time-consuming query will have run on unreliable data, and it takes too long to just run it again.

But for many companies, the more important issue is that these long queries are expensive to run. Our customers use Snowflake data warehouses and most of the time the majority of their bill comes from computation (or “compute”), which is essentially the computing power needed to run the query. With Snowflake, compute costs are determined by query runtime. This is more cost-effective than determining costs based on the amount of data being processed during computation — when queries are written efficiently. In the event of a very long query, this can rapidly become very expensive.

Our customer knew there was likely a way to make this query more efficient, but they didn’t know how to do it themselves. So, they came to the Mozart data analyst team for help.

How Mozart helped solve it

Solving data problems is a lot more complicated than just learning some SQL. It requires an understanding of why the question is being asked and how the data that contains that answer is structured.

Understanding their business context

Our data analysts are great at what they do, but they can’t solve all of our customer’s problems for them. It’s always important to understand the “why?” behind a project, and the data everyone is working with, so that the right business question can be answered with the data. This is where collaboration is so important.

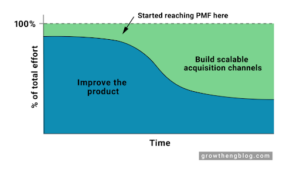

What our team learned is that the primary metric the customer cared about was weekly active screen viewers, because habit of use is a key indicator that users are getting value from their service. It’s how they know their growth is sustainable.

In this case, that required our analysts to understand the events used to define a habit of use, as well as the expected output of monitoring this. This customer wanted to notify their Growth team of these behaviors, so they could understand if initiatives are increasing engagement and preventing users from churning, or if they needed to adjust their strategy.

Optimizing the query

With an understanding of the business context, our team set out to optimize the query and save our customer valuable resources.

The first step was rethinking the computation method. Studying the events they wanted to measure gave our analysts an idea of how frequently those events are reflected in the source table, and thus how much they could reduce the data when extracting it. If every row reflects the event, you have to pull every row and there’s not a good reason to extract that data. Our analysts determined that was not the case, so there was definitively some room for refinement.

Events that happen a lot can also be a signal. If the user is taking the action in question 50 times in a week, but we only care about it happening at least once in a week, we can get a 50x reduction in the amount of data we have to scan to get the answer. Scanning less data = faster, cheaper queries.

By being frugal with the data extraction process, we were able to make a quicker pass over the 1.7B relevant rows and grab just the atomic events we needed. At this point, our analysts could construct the metric from the reduced set of input data — essentially making a second pass over the extracted events, without asking the query to parse through all of the superfluous information again. This allowed a query that used to take 16 hours to run in just 5 minutes.

While constructing that final query, our analysts looked for any adjacent use cases our customer had that required the same data. Using one clean, reliable dataset for more than one purpose is a great way to keep query costs lower.

This project is an excellent example of the value of having access to expert data professionals, whether on your team or through Mozart Data. They can supercharge slow queries while finding additional opportunities to make data work more organized and efficient. And in the case of working with Mozart, you aren’t responsible for paying for a full-time database expert — you just have access to them, and they can even show your team how to solve problems more efficiently in the future.

If you’d like to learn more about how Mozart can help your company with all of your data needs, schedule a demo or check out our case studies page.