Modern Data Stack Architecture

How modern data tools allow organizations to centralize and organize their data for effective analysis

TL;DR:

Modern data stack architecture is a vital blueprint for managing, organizing, and interpreting the vast array of data that businesses encounter daily. This complex system represents a shift away from traditional setups that rely on on-premises storage and on data processing and analytics tools, like Excel, that are prone to silos and hinder collaboration.

A complex system isn't necessarily an inaccessible one. Modern data stack architecture requires some technical expertise (which companies like Mozart Data supply through a managed offering), but it also makes it easy to automate all data work within one cohesive framework.

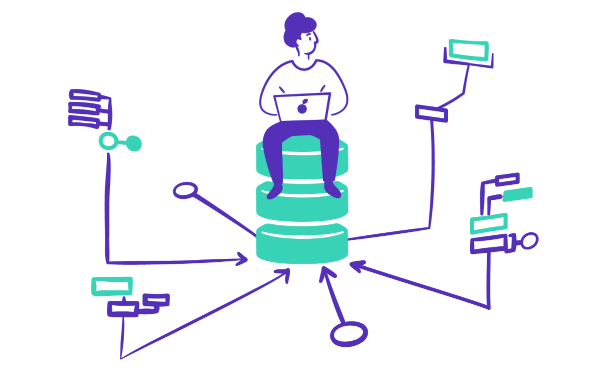

Modern data stack architecture comprises multiple layers of technology interacting seamlessly to provide robust data processing capabilities. A data stack represents the entire system, starting with the data sources and ending with the visualization or business intelligence tools.

Key principles guiding the modern data stack landscape include scalability, flexibility, security, and efficiency. Scalability ensures that the system can handle growing volumes of data without slowing down, while flexibility allows it to adapt to a variety of data formats and sources. Security is paramount in protecting sensitive information.

Perhaps most importantly, the evolution of the modern data stack has greatly improved organizations’ ability to handle complex data workflows and revolutionized how they interact with data. Through the integration of various tools and technologies, organizations can now manage data in previously unimaginable ways.

Innovation in the modern data stack landscape continues to shape the future of data management. As more organizations of all sizes adopt these technologies, the modern data stack promises to be a driving force in how they understand and leverage data in the years ahead.

The world of big data is growing exponentially, and with it comes the need to derive actionable insights from diverse data sources. The foundation that makes this possible in any organization is the ETL process, an essential element of modern data stack architecture. ETL stands for extract, transform, and load. Data is extracted from source systems, transformed into a consistent, analysis-ready format, and loaded into a central repository such as a data warehouse.
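To make the three stages concrete, here is a minimal sketch in Python using only the standard library. It is illustrative and not how any particular product implements ETL. It makes a few assumptions:

- The source is a hypothetical CSV export of orders.
- An in-memory SQLite database stands in for a real warehouse.
- The table and column names are invented for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (for example, a billing tool).
RAW_ORDERS_CSV = """order_id,customer_email,amount_usd,order_date
1001, Alice@Example.com ,19.99,2024-03-01
1002,bob@example.com,5,2024-03-02
1003,carol@example.com,42.50,2024-03-02
"""


def extract(raw_csv: str) -> list[dict[str, str]]:
    """Extract: read rows from the source export as dictionaries of strings."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, str, int, str]]:
    """Transform: fix types and normalize values into an analysis-ready shape."""
    out = []
    for row in rows:
        out.append((
            int(row["order_id"]),                      # str -> int
            row["customer_email"].strip().lower(),     # normalize the join key
            round(float(row["amount_usd"]) * 100),     # store money as integer cents
            row["order_date"],                         # already ISO 8601 in this source
        ))
    return out


def load(conn: sqlite3.Connection, rows: list[tuple[int, str, int, str]]) -> None:
    """Load: write the transformed rows into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer_email TEXT, "
        "amount_cents INTEGER, order_date TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")  # stand-in for a real warehouse
    load(warehouse, transform(extract(RAW_ORDERS_CSV)))
    print(warehouse.execute("SELECT SUM(amount_cents) FROM orders").fetchone())
```

Run as a script, this prints `(6749,)`. Storing amounts as integer cents is one common way to avoid floating-point rounding surprises once the data reaches the warehouse.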

Here’s a more in-depth look at what this process entails:

ETL in modern data stack architecture plays multiple roles, including:

Data warehousing plays a vital role in modern data analytics and business intelligence. The name invites an easy parallel: just as a physical warehouse stores goods, a data warehouse is where digital information is stored, refined, and served as needed.

In the modern data stack, a data warehouse is more than just a storage unit; it's a consolidated digital reservoir of an organization's information assets. Businesses today handle data from many disparate sources, which makes it hard to find actionable insights in the noise. Impactful analysis almost always requires combining multiple sources. For example, a SaaS company might need to combine marketing campaign performance, sales touchpoints, payment data, and product usage data to see the complete user journey. A data warehouse makes these insights accessible by bringing all of that data to a single location.
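As a rough illustration of why a single location matters, the sketch below loads three hypothetical sources into an in-memory SQLite database and answers a cross-source question with one SQL query. The sources are marketing campaign attribution, payments, and product usage, and SQLite stands in for the warehouse. The table names, columns, and sample rows are invented for the example. A production warehouse such as Snowflake would use its own SQL dialect and loading tools.

```python
import sqlite3

# Hypothetical extracts from three separate tools (one marketing row per user assumed).
MARKETING = [("u1", "spring_promo"), ("u2", "spring_promo"), ("u3", "webinar")]
PAYMENTS = [("u1", 4900), ("u3", 9900), ("u3", 9900)]
PRODUCT_USAGE = [("u1", "login"), ("u1", "export"), ("u2", "login"), ("u3", "login")]


def build_warehouse() -> sqlite3.Connection:
    """Load every source into one database so they can be queried together."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE marketing (user_id TEXT, campaign TEXT)")
    conn.execute("CREATE TABLE payments (user_id TEXT, amount_cents INTEGER)")
    conn.execute("CREATE TABLE product_usage (user_id TEXT, event TEXT)")
    conn.executemany("INSERT INTO marketing VALUES (?, ?)", MARKETING)
    conn.executemany("INSERT INTO payments VALUES (?, ?)", PAYMENTS)
    conn.executemany("INSERT INTO product_usage VALUES (?, ?)", PRODUCT_USAGE)
    return conn


# One query spans all three sources: users, revenue, and activity per campaign.
# The subqueries pre-aggregate payments and usage per user so the joins
# don't multiply rows.
CAMPAIGN_REPORT_SQL = """
SELECT m.campaign,
       COUNT(*)                          AS users,
       COALESCE(SUM(p.revenue_cents), 0) AS revenue_cents,
       COALESCE(SUM(u.events), 0)        AS product_events
FROM marketing AS m
LEFT JOIN (SELECT user_id, SUM(amount_cents) AS revenue_cents
           FROM payments GROUP BY user_id) AS p ON p.user_id = m.user_id
LEFT JOIN (SELECT user_id, COUNT(*) AS events
           FROM product_usage GROUP BY user_id) AS u ON u.user_id = m.user_id
GROUP BY m.campaign
ORDER BY m.campaign
"""

if __name__ == "__main__":
    for row in build_warehouse().execute(CAMPAIGN_REPORT_SQL):
        print(row)
```

Run as a script, this prints `('spring_promo', 2, 4900, 3)` and then `('webinar', 1, 19800, 1)`. No single source tool could produce either row on its own.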

A warehouse also serves as a single source of truth. By centralizing data into one repository, a data warehouse ensures that each department and stakeholder looks at and works with the same information set. This resolves inconsistencies and fosters a data-driven culture where decisions are based on a unified understanding of the data.

Warehousing also plays a critical role in promoting data reliability. Because every team draws on the same centralized data, businesses get accurate, up-to-date, and consistent information across the board.

Cloud data warehouses such as Snowflake have further transformed the field. Snowflake separates storage from compute so that each can scale independently, and its architecture is optimized for analytical performance. Tools like these elevate the concept of data warehousing by aligning it with the needs of modern businesses.

Data transformation is often likened to the refining process that turns raw ore into precious metal. In data analytics, it’s the step that converts raw data, with all its quirks and irregularities, into a more usable format, paving the way for meaningful insights and decision-making.

A data transformation tool is designed to automate and optimize the transformation process. Its primary function is to ingest raw data and, through a series of operations, reshape it into a format conducive to analytics. This usually involves converting data types, rescaling values, or translating terms.
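The three operations named above can be sketched in a few lines of Python: converting data types, rescaling values, and translating terms. This is a minimal sketch, not any particular tool's API. It makes a few assumptions:

- Source records arrive as dictionaries of strings.
- The field names (`id`, `status`, `mrr_cents`) are hypothetical.
- The `STATUS_TERMS` lookup table is a made-up stand-in for a real mapping.

```python
from dataclasses import dataclass

# Hypothetical lookup that translates a source tool's status codes into
# business-friendly terms.
STATUS_TERMS = {"A": "active", "C": "churned", "T": "trial"}


@dataclass
class Account:
    account_id: int
    status: str
    mrr_dollars: float


def transform_row(raw: dict[str, str]) -> Account:
    """Reshape one raw record into an analysis-ready Account."""
    return Account(
        account_id=int(raw["id"]),                          # convert type: str -> int
        status=STATUS_TERMS.get(raw["status"], "unknown"),  # translate terms
        mrr_dollars=int(raw["mrr_cents"]) / 100,            # rescale: cents -> dollars
    )


if __name__ == "__main__":
    print(transform_row({"id": "42", "status": "T", "mrr_cents": "12500"}))
```

Run as a script, this prints `Account(account_id=42, status='trial', mrr_dollars=125.0)`. Unrecognized status codes map to `"unknown"` rather than raising, so one bad code doesn't halt the whole batch.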

This is also where data from multiple sources can be combined, which is far more effective than copying and pasting data from multiple CSVs into a single Excel file or Google Sheet. That manual approach still requires someone to make values match by hand, such as pairing user IDs in a sales tool with the corresponding IDs in a marketing tool. And that example is simple compared with bringing in the additional data sources from the SaaS scenario above.
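The sketch below automates the manual ID-pairing step that a transformation layer replaces. It assumes both hypothetical tools record the contact's email address and that a case-insensitive, whitespace-trimmed email is an acceptable matching key. Real integrations often need a dedicated ID-mapping table or fuzzier rules.

```python
def normalize_email(email: str) -> str:
    """Hypothetical matching rule: case-insensitive, whitespace-trimmed email."""
    return email.strip().lower()


def match_records(sales: list[dict], marketing: list[dict]) -> list[dict]:
    """Pair each sales deal with the marketing touchpoints sharing its email."""
    # Index marketing touchpoints by normalized email for fast lookup.
    touches_by_email: dict[str, list[str]] = {}
    for touch in marketing:
        key = normalize_email(touch["email"])
        touches_by_email.setdefault(key, []).append(touch["channel"])

    matched = []
    for deal in sales:
        key = normalize_email(deal["contact_email"])
        matched.append({
            "deal_id": deal["deal_id"],
            "email": key,
            "channels": touches_by_email.get(key, []),  # empty if never touched
        })
    return matched


if __name__ == "__main__":
    sales = [{"deal_id": "D1", "contact_email": "Alice@Example.com"}]
    marketing = [
        {"email": " alice@example.com", "channel": "email"},
        {"email": "ALICE@EXAMPLE.COM", "channel": "webinar"},
    ]
    print(match_records(sales, marketing))
```

Because the rule lives in one function, tightening or loosening it later changes every downstream join at once.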

But transformation isn't just about format and structure; it also encompasses operations that ensure the data is accurate and relevant. The transformation layer is where data cleansing, an essential component of any analytics process, takes place. Cleansing is the identification and correction of errors in the data, such as duplicates, missing values, and malformed entries.
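A cleansing step can be sketched as a function that both identifies and rectifies errors, logging what it did. This is a minimal illustration under assumed rules, not a standard. The hypothetical order records are handled as follows:

- Rows with a missing ID, a duplicate ID, or a non-numeric amount are dropped.
- A missing country is filled with an explicit `"UNKNOWN"` placeholder.

```python
def cleanse(rows: list[dict[str, str]]) -> tuple[list[dict], list[str]]:
    """Identify and rectify common errors; return clean rows plus an error log."""
    clean: list[dict] = []
    errors: list[str] = []
    seen_ids: set[str] = set()
    for row in rows:
        order_id = row.get("order_id", "").strip()
        if not order_id:
            errors.append("missing order_id; row dropped")
            continue
        if order_id in seen_ids:
            errors.append(f"{order_id}: duplicate; row dropped")
            continue
        try:
            amount = float(row.get("amount", ""))
        except ValueError:
            errors.append(f"{order_id}: non-numeric amount {row.get('amount')!r}; row dropped")
            continue
        seen_ids.add(order_id)  # only IDs of accepted rows count as "seen"
        clean.append({
            "order_id": order_id,
            "amount": amount,
            # Rectify: normalize case and fill a missing country with a placeholder.
            "country": row.get("country", "").strip().upper() or "UNKNOWN",
        })
    return clean, errors


if __name__ == "__main__":
    clean, errors = cleanse([
        {"order_id": "1", "amount": "10.5", "country": "us"},
        {"order_id": "1", "amount": "10.5", "country": "us"},
        {"order_id": "2", "amount": "n/a", "country": "DE"},
    ])
    print(clean)
    print(errors)
```

Keeping an error log, rather than silently discarding rows, lets analysts see how much data each rule removed.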

However, the modern digital ecosystem isn't built on a single data stream. Today's businesses use multiple platforms and channels, each producing its own data. Combining data from these sources is therefore necessary both for efficiency and for creating a single source of truth. Data transformation ensures that data from each source is harmonized into a consistent format.
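Dates are a typical example of harmonization: each tool tends to export them in its own format. The sketch below converts every source's format to an ISO 8601 date. The source names and their formats are assumptions made for illustration. It also assumes that timestamps without an offset are already in the reporting time zone, while timestamps with an offset are converted to UTC first.

```python
from datetime import datetime, timezone

# Hypothetical per-source date formats; each tool exports dates differently.
SOURCE_DATE_FORMATS = {
    "crm": "%m/%d/%Y",                  # e.g. 03/01/2024
    "billing": "%Y-%m-%dT%H:%M:%S%z",   # e.g. 2024-03-01T09:30:00+0000
    "ads": "%d.%m.%Y",                  # e.g. 01.03.2024
}


def harmonize_date(source: str, value: str) -> str:
    """Parse a source-specific date string and return an ISO 8601 date (YYYY-MM-DD)."""
    parsed = datetime.strptime(value, SOURCE_DATE_FORMATS[source])
    if parsed.tzinfo is not None:
        # Offset-aware timestamps are converted to UTC before taking the date.
        parsed = parsed.astimezone(timezone.utc)
    return parsed.date().isoformat()


if __name__ == "__main__":
    for source, value in [("crm", "03/01/2024"),
                          ("billing", "2024-03-01T09:30:00+0000"),
                          ("ads", "01.03.2024")]:
        print(source, harmonize_date(source, value))
```

All three inputs print `2024-03-01`, so rows from different tools can now be grouped or joined by date.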

The inherent limitations of the in-app reporting built into individual data sources further underscore the need for data transformation. While these reports offer valuable insights within their narrow domain, they cannot answer cross-domain questions. A marketing tool might tell you how many visitors you had, but it can't tell you how many of those visitors made a purchase unless its data is combined with sales data.
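The visitor-to-purchase question above needs both datasets at once, as this small sketch shows. It assumes, hypothetically, that the marketing tool and the sales system share a `visitor_id`. In practice, establishing that link is usually the hard part and depends on tracking setup.

```python
def visitor_conversion(visitor_ids: list[str], purchases: list[dict]) -> tuple[int, int, float]:
    """Combine marketing visits with sales records to compute visitor conversion."""
    visitors = set(visitor_ids)  # unique visitors seen by the marketing tool
    # Visitors who appear in sales data; purchases from unknown visitors are ignored.
    buyers = {p["visitor_id"] for p in purchases} & visitors
    rate = len(buyers) / len(visitors) if visitors else 0.0
    return len(visitors), len(buyers), rate


if __name__ == "__main__":
    visits = ["v1", "v2", "v3", "v4", "v1"]
    purchases = [{"visitor_id": "v2"}, {"visitor_id": "v2"}, {"visitor_id": "v4"}]
    print(visitor_conversion(visits, purchases))
```

Here four unique visitors produce two buyers, a 50% conversion rate. Neither tool could report that number alone.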

Business intelligence (BI) encompasses the strategies and technologies enterprises use to analyze business data. A business intelligence tool is a software solution specifically designed to analyze, visualize, and report on complex business data.

Here’s why BI tools are so essential to the modern data stack:

A shift is happening in data analytics. Enterprises are moving away from traditional, clunky solutions in favor of modern data stack tools. These tools are uniquely designed to handle the complexities and requirements of contemporary data management and analysis.

Mozart Data is a modern data platform that simplifies data integration, transformation, and visualization for businesses. Embracing the most recent advancements in data stack technologies, Mozart Data is an all-in-one data platform that eliminates the need to juggle multiple tools or hire expensive data engineers to manage connections between them.

The data-platform-as-a-service model that Mozart Data employs reduces operational overhead, offers greater scalability, and ensures access to the latest features and security protocols. The platform makes it easy for users to leverage a host of modern data stack tools, unify their data, and gain a holistic view of their operations.

As the data demands of modern businesses grow in volume and complexity, the need for platforms that can handle this surge becomes evident. With its forward-thinking approach, Mozart Data is the ideal solution for enterprises navigating modern data management challenges.

Ready to get started? Schedule a demo today to learn more about Mozart Data and how you can transform the way you manage your data.
