Businesses are rapidly adopting business intelligence (BI) tools to get a better view of the health of their business. As a result, more and more companies are realizing the value of insights derived from their BI tools is also dependent on data quality and are looking for ways to improve data quality — including researching data cleansing tools.

We’ve introduced the concept of data quality at an abstract level, but what is clean data? One way to think about data quality in the context of business intelligence is “garbage in, garbage out”. The quality of the results is only as good as the quality of the data consumed by your BI tools. For a more specific answer to the question, “Why is clean data important?”, it might be helpful to look at a few examples.

Let’s say that you have customer data from three different teams in your organization with the possible variations below:

*Name* variations:

*Address* variations:

*Date* variations:

Introduce the possibility of abbreviations in addresses and dates and the number of variations can increase substantially.

This might seem like a trivial problem — what are the odds that all of your teams are going to be on a different page with this? Why can’t we just tell them to unify their practices?

If you don’t believe us on this, talk to your teams. It’s quite likely they’re going to have valid reasons for why there will be at least some data inconsistencies.

Data warehouses predominately use SQL (Structured Query Language) databases. Each name, address, and date are stored in a field in the database. The definition for these fields is called the schema. SQL databases are organized as tables, where each column is a field, and each row is a record.

If you want to combine multiple data sources (which is required for more complicated analysis like cohorting your customer/user base, analyzing product data with payments data, enriching marketing campaigns, etc.), you need the data to be compatible. Minor variations in format require you to manually fix them in order to combine data sets and can entirely break more complicated data models.

So, if databases, ad platforms, CRMs, etc. that your different teams are using have different variations of the name, address, and date as specified above, you’re going to run into a problem if you try to consume said data with a BI tool.

Following are three common data cleaning tasks:

Whether you ask, “What is data cleaning?” or “What is data cleansing?”, your BI tools need clean data to produce quality insights.

There are a handful of ways to approach using data and cleaning it properly. Traditionally, companies and users have tried to work with data in separate, siloed tools that create that data (think ad platforms, CRMs, product databases, social media platforms, etc.). There are limitations, however, to working with the data in these tools, so data users (whether they’re actual data analysts or are any other operator at an organization who wants to work with data) often want to move the data into a BI tool for more efficient and complex analysis and visualization.

What this process doesn’t account for is how clean or dirty the data is before it reaches that BI tool. If the data isn’t clean, users must spend a great deal of time manually cleaning it by resolving inconsistencies, reformatting inputs (e.g. changing entered mm/dd/yy date formats to match mm/yy date formats), identifying potentially incorrect outliers, and correcting errors.

Additional problems arise with this process when analysis requires data from more than one source. These data sources likely vary in format, schema, and other inconsistent characteristics.

Cleanup is frequently done by exchanging comma-separated value (CSV) files between ETL tools and spreadsheets like Excel or Google Sheets, where manual cleaning can occur. That data is then loaded into a data warehouse, a large data repository. This process is called extract, transform, load, or ETL.

While manually cleaning data from various sources can work, it costs you time and resources, and data quality is not guaranteed, as you reintroduce the possibility of human error in a repetitive process. If you’re attempting to drive your business forward with business intelligence and data mining, it simply isn’t practical.

Every company wants a better solution than manual data cleansing, but many organizations are unsure how to move forward with data work and tools, especially if they don’t have many members of the team with advanced data backgrounds.

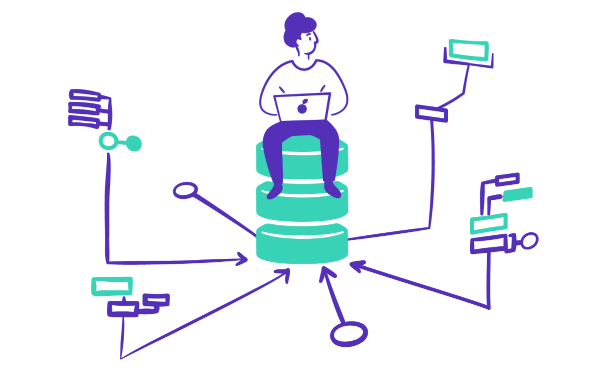

To address these problems, Mozart Data developed an out-of-the-box modern data stack, which is a set of technologies enabling an organization to centralize, organize, clean, and operationalize their data. This provides any organization with the capabilities of a full data engineering team, without the requisite experience and financial resources to hire and manage a data engineering team.

Tools in any modern data stack include:

The ETL tools, data warehouse, transformation capabilities, and additional automation comprise a modern data platform. We like to think of the modern data platform as the logical evolution of the modern data stack, where more tasks can be automated and fine-tuned to provide a better user experience, so users can focus on analysis and operationalizing insights, not solving data problems. The result should be an automated data pipeline controlled from one tool, providing your team with the clean, reliable data they need to perform their work.

ETL tools include a data cleaning tool/data cleansing tool.There is no distinction between data cleansing vs data cleaning. The terms are used interchangeably.

If you want to keep up with the fast pace of business today, you need modern data tools that give you the power and flexibility to leverage your data. Mozart Data’s modern data platform can put that power in your hands.

Organizations with large quantities of data have employed a wide range of data cleaning techniques. For data cleaning in data science, data scientists often employ custom solutions like Python data cleaning tools or tools implemented in the R programming language. These approaches, of course, require more high-value resources than many businesses can justify.

Businesses have traditionally been more spreadsheet-focused, where spreadsheets like Excel, for example, are the preferred tool for manipulating business data. Data cleaning in Excel is usually a tedious and error-prone manual process, although you can automate it to some degree with macros or VBA (Visual Basic for Applications). Sharing spreadsheet data is typically done via CSV files.

We’ve touched on data warehouses, but let’s get deeper.

Data warehouses, where your BI data resides, are essentially column-oriented SQL databases, as we previously discussed. This is where ETL tools come in.

Let’s review the E-T-L steps:

Extraction tools can import your data from various sources. Data transformation tools then take care of the data cleaning process, cleaning and merging your data into a single source of truth. The transformed SQL data is then loaded into your data warehouse.

With a data warehouse in place, you can also transform that data further in the actual warehouse. This is often to perform an “ad hoc query”, where a user wants to search for the answer to a new problem (as opposed to a standard transformation you always need to run on the data, which is often run during the ETL process). In the context of data cleansing, however, you might run a new data transformation when you’re combining data with a new data source for the first time and formatting needs to be adjusted, or if you find that a previous process missed something for a particular dataset. What’s important to understand is this transformation ability is really flexibility and adaptability for data-driven organizations small or large.

Implementing the data transformation process using either the Python or spreadsheet approaches has trade-offs.

(Note that Mozart’s platform can operate on already loaded data, so you could extend the process to E-L-T or E-T-L-T.)

Whether you perform data cleaning manually or with automation, it is one component of an overall data strategy. In the interest of the long-term health and profitability of your business, you should develop a company culture that commits to data quality. This includes testing and validation at various stages as data moves through the pipeline.

Regarding data validation vs data cleaning, the terms are sometimes confused. Validation confirms the quality of data after the cleaning process.

Concerning data cleaning, there are data cleaning steps that are generally accepted best practices.

Identifying the type of data or data fields that are necessary for a specific project or process is the first step in data cleansing. It helps to know how your data is structured. In layman’s terms, you don’t always know what you don’t know, so understanding your data is going to make life much easier.

After identifying the relevant data fields, data is collected, sorted, and organized.

As soon as the data has been collected, inaccuracies are resolved, and duplicate values are identified and removed.

Data cleaning tools search each field for missing values and then fill in those values to create a complete data set and avoid information gaps.

For a data cleaning process to be effective, it should be standardized to be easily replicated for consistency. Ideally, this requires participation and commitment throughout your company.

Periodically review the process and resulting data. Validate data quality and adapt when necessary. Data cleaning is done by the data transformation tool, so testing and validating should be performed at that stage and before loading.

Every data tool has its strengths and weaknesses. The Mozart Data platform allows you to combine the power of these tools with ease of use. Anyone proficient in SQL can quickly and easily set up data testing and validation.

Choosing the best data cleaning tools depends on your needs, data types, and specific requirements.

Some factors to consider include:

What are your requirements?

Suppose you are a researcher with engineering expertise available and need data cleaning tools for data science. In that case, you may prefer an ad-hoc approach using a programming language like Python or R. This option gives you maximum flexibility to meet special requirements that may not be offered in an off-the-shelf tool.

On the other hand, startups, SMB, and enterprise companies may have BI needs that require a higher level of automated data work. In this case, there are turnkey solutions that satisfy a breadth of their requirements.

In the universe of data cleaning tools, open source tools can satisfy a mix of requirements between turnkey and custom. While these tools can be used out of the box, the source code is available for customization. However, these tools aren’t recommended for inexperienced users — or users that don’t have more time on hand to manage a tool.

Below is a list of some of the best data cleaning products available. These products offer various other ETL features in addition to data cleaning. (The list is in no particular priority and does not reflect ranking.)

Mozart Data utilizes Fivetran as one portion of the modern data platform because of its broad capabilities and reliable performance. Additional capabilities are provided via a proprietary SQL-based data transformation layer, enabling ETL, ELT, or even ETLT processes for moving and organizing data. Want to see how Mozart actually works? Take an interactive product tour here. Prefer a demo with someone from Sales and a data analyst? You can schedule that here!

Resources